Working with AI in ChatUML

ChatUML's AI functionality is built on OpenAI's large language models (LLMs). Each time you send an AI request, the following information is sent to the LLM API:

- The current diagram code

- The request message you want to send

- The previous chat messages between you and the AI assistant in the current diagram

As you continue to work on your diagram, both the message history and the diagram code grow longer, so each request gets larger. Eventually, you may reach the LLM's token limit.
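To illustrate why requests get larger over time, here is a minimal sketch in Python. It is not ChatUML's actual implementation: the function names, the dictionary keys, and the placeholder assistant reply are all hypothetical, and size is measured in plain characters for simplicity.

```python
def build_request(diagram_code, user_message, history):
    """Bundle the three pieces of information sent with every AI request.

    Hypothetical structure for illustration only.
    """
    return {
        "diagram_code": diagram_code,
        "message": user_message,
        "history": list(history),
    }


def request_size(request):
    """Total number of characters across all parts of the request."""
    return (
        len(request["diagram_code"])
        + len(request["message"])
        + sum(len(m) for m in request["history"])
    )


history = []
diagram = "@startuml\nAlice -> Bob: Hello\n@enduml"
sizes = []
for message in [
    "Add a reply from Bob",
    "Add a third participant",
    "Rename Alice to Client",
]:
    request = build_request(diagram, message, history)
    sizes.append(request_size(request))
    # Both the user message and the assistant's answer join the history.
    history.append(message)
    history.append("(assistant reply)")

print(sizes)  # each request is larger than the one before it
```

Even with an unchanged diagram, every request in this sketch is larger than the previous one, because the earlier messages are sent again each time.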

Message Count and Token Limit

The LLM breaks your request down into tokens so that it can process it and produce a response. As a rough guide, one token corresponds to about four characters of English text, so 100 tokens are approximately 75 words, and 1500 words are approximately 2048 tokens.

Both the tokens in your request and the tokens in the LLM's response count toward the model's token limit. For example, if a model has a token limit of 4097 tokens and your request uses 4000 tokens, the AI's response can be at most 97 tokens long.
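The arithmetic above can be sketched in a few lines of Python. This is only a rough estimate based on the four-characters-per-token rule of thumb, not a real tokenizer, and the function names are made up for this example.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of English text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def remaining_response_tokens(request_tokens: int, token_limit: int) -> int:
    """Tokens left for the response once the request is counted."""
    return max(token_limit - request_tokens, 0)


# The example from the text: a 4000-token request against a 4097-token limit.
print(remaining_response_tokens(4000, 4097))  # 97
```

A request that already exceeds the limit leaves no room for a response, which is why the sketch never returns a negative number.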

For a more detailed explanation about tokens, please see this page: https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them

This is why we have set a hard limit of five messages per diagram in ChatUML. Users who purchase the Standard Package have a limit of 20 messages per diagram, and users who purchase the Pro Package have a limit of 50 messages per diagram.

Choosing AI Models

Each model has a different token limit. The models that are better at logical thinking also tend to respond more slowly.

- GPT-3.5 Turbo 16k: 16k tokens, fastest response, not very good at logical thinking

- GPT-4: 8k tokens, slower response, very good at logical thinking

- GPT-4 Turbo 128k: 128k tokens, slower response, very good at logical thinking
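As a rough aid for choosing, the token limits listed above can be compared against an estimated request size. The sketch below is an assumption-laden illustration: it treats "16k", "8k", and "128k" as round thousands, and both the helper name and the default space reserved for the response are hypothetical.

```python
# Token limits from the list above, treated as round thousands (an approximation).
MODEL_TOKEN_LIMITS = {
    "GPT-3.5 Turbo 16k": 16_000,
    "GPT-4": 8_000,
    "GPT-4 Turbo 128k": 128_000,
}


def models_that_fit(request_tokens, reserved_response_tokens=500):
    """Return the models whose limit covers the request plus room for a response."""
    needed = request_tokens + reserved_response_tokens
    return [name for name, limit in MODEL_TOKEN_LIMITS.items() if limit >= needed]


print(models_that_fit(10_000))  # ['GPT-3.5 Turbo 16k', 'GPT-4 Turbo 128k']
```

A request of about 10,000 tokens no longer fits GPT-4 in this sketch, so a long-running diagram may require one of the models with a larger token limit.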

Accuracy of AI Responses

Although AI models are good at logical thinking, they are less reliable at generating error-free code. In ChatUML, the generated PlantUML code may occasionally contain small syntax errors.

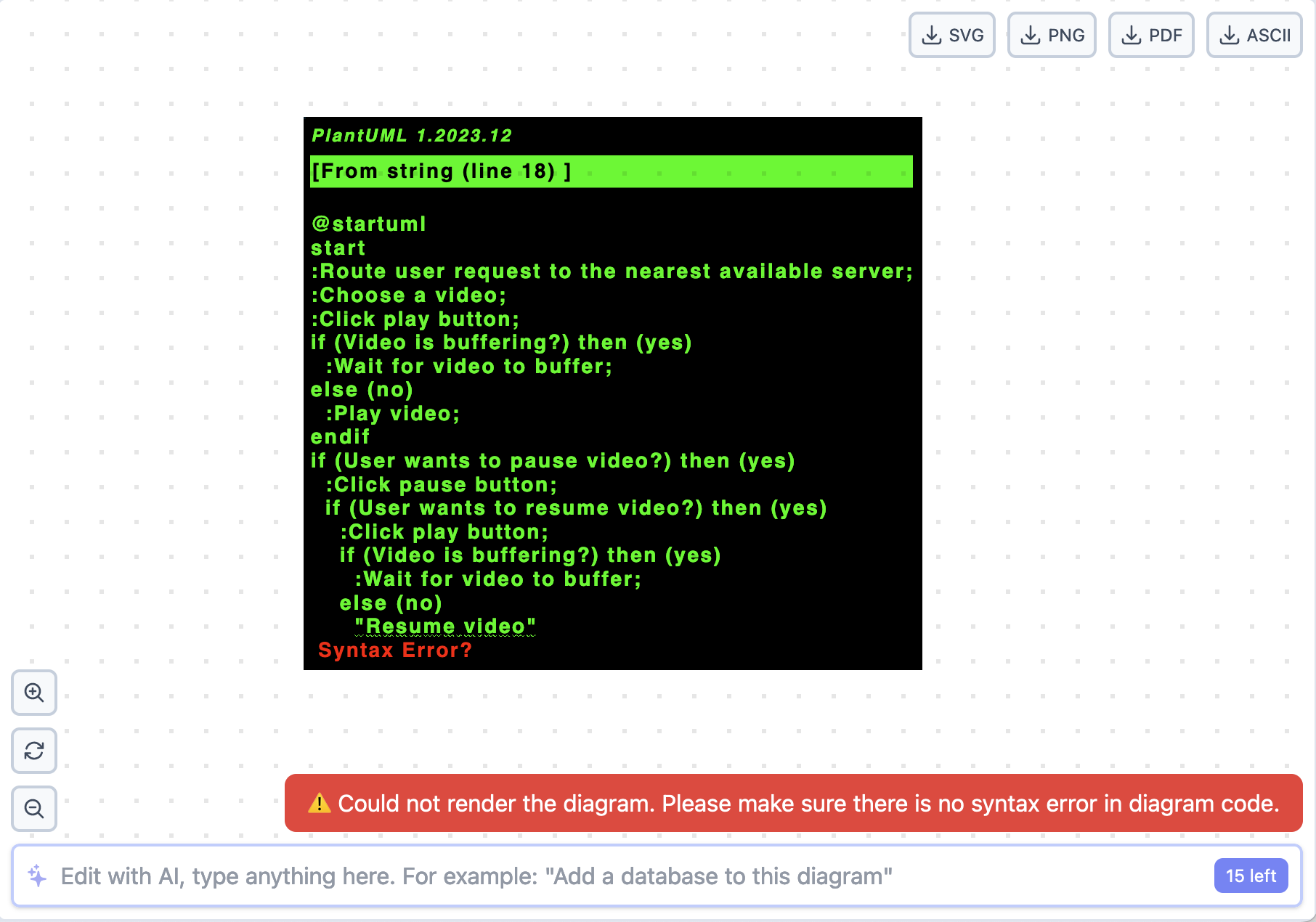

When this happens, the diagram renderer will display an error message, like this:

The best course of action in this case is to correct the diagram code manually, and you may need some PlantUML knowledge to do so.

Please note that while you can also ask the AI to fix the code for you, this is not recommended. The outcome is unpredictable, and you might spend credits without getting a good result. If you still want to proceed, make sure your AI request includes as much information as possible about the error shown on the diagram.

Please see the "Dealing with syntax errors" document for more details.